Scroll Smarter: Understanding AI’s Real Impact on Social Media

We don’t really scroll anymore. We are being scrolled.

Every movement is tracked, predicted, and served back to us. Every post we see has been selected by an invisible network of algorithms designed to keep us engaged, entertained, and, most importantly, active.

Social media used to feel simple: you would follow people you cared about, see their posts in the order they appeared, and decide for yourself what to interact with. But that sense of control has slowly faded.

Nowadays, what we see online isn’t the full picture of reality; it’s a carefully personalised version of it.

As someone who has worked with social platforms for years, I’ve always been fascinated by how people engage with digital spaces. Yet, the more I learned about artificial intelligence (AI) and how it’s shaping those spaces, the more I realised that what we often treat as personal choice is, in fact, the result of systems quietly learning from us and shaping us in return.

That is why it has become more relevant than ever to understand not just what AI is doing to social media, but what that means for all of us who build, design, and use it every day.

What’s Happening Beneath the Feed

AI isn’t changing how we scroll; it’s changing why we scroll.

When feeds first became algorithmic, the goal was simple: make content discovery easier. No one wanted to miss the “best” posts, so platforms started prioritising relevance. Over the years, that evolved into something far more sophisticated and far denser.

Today, every scroll, pause, or like becomes data. That data feeds models that predict what will capture our attention next. The result is a “personalised” experience that feels seamless but comes with a trade-off: we’re no longer exploring information, we’re being guided through it.

AI doesn’t show us the world as it is; it shows us the version of the world it predicts will keep us interested.

This is the paradox of AI in social media. It promises connection but often narrows perspective. It shows us what we’re most likely to enjoy, not necessarily what we need to know.

For users, this feels subtle. For those of us who work in tech, it’s a reminder that every design choice, every algorithmic tweak, carries weight. The systems we build don’t just reflect society; they reinforce it.

The Quiet Power of Algorithms

As I prepared the talk “Scroll Smarter: What AI is really doing to Social Media” for the #12 Lisbon GenAI Meetup on September 29th, 2025, one idea kept surfacing: the illusion of choice.

Algorithms now decide whose voices rise, which stories deserve to be shown, and what ideas gain dominance. That influence isn’t always intentional, nor does it necessarily come from a bad place. It often comes from optimising for engagement: engagement drives visibility, visibility drives value, and value drives curation decisions. But engagement isn’t the same as impact, and a single tweak to a recommendation system can amplify anger, distort speech, or push niche opinions into mainstream visibility, which can ultimately shift what large groups of people see and feel online.

Now, AI has learned more than just what we like. It has learned how to keep us emotionally invested, because the longer we stay, the more data it gathers and the smarter it becomes.

Over time, this creates a behavioural feedback loop: the more data we give it, the better it predicts what we’ll do next. When algorithms are trained to maximise attention, they naturally favour content that provokes strong reactions: surprise, anger, humour, or outrage.
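To make that loop concrete, here is a minimal sketch in Python. It is a toy model, not how any real platform works: the content categories, the reaction rates, and the `simulate_feed` function are all invented for illustration. The "model" always shows whatever it currently predicts will get the strongest reaction, then updates its prediction from what it observes.

```python
def simulate_feed(rounds=50, learning_rate=0.1):
    """Toy feedback loop: always show the category with the highest predicted reaction."""
    # Hypothetical reaction rates, invented for this example.
    true_reaction_rate = {"calm_explainer": 0.30, "humour": 0.45, "outrage": 0.60}
    # The model starts neutral: every category has the same estimated score.
    estimated = {name: 0.5 for name in true_reaction_rate}
    impressions = {name: 0 for name in true_reaction_rate}

    for _ in range(rounds):
        # Greedy ranking: pick the category with the highest current estimate.
        shown = max(estimated, key=estimated.get)
        impressions[shown] += 1
        # Learn from the observed reaction: nudge the estimate toward it.
        observed = true_reaction_rate[shown]
        estimated[shown] += learning_rate * (observed - estimated[shown])

    return impressions, estimated


impressions, estimated = simulate_feed()
print(impressions)
```

In this sketch, the category that provokes the strongest reaction ends up with almost all of the impressions after only a few rounds. Nothing in the code "wants" outrage; it only optimises for the reaction it measures.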

It’s not manipulation in the traditional sense; it’s pattern recognition at scale. The system simply replicates what people react to.

When Help Turns into Harm

Because AI mirrors the systems it’s built within, we must also be aware of those systems’ blind spots. That’s why moderation tools sometimes fail to recognise cultural nuance and why recommendation engines might reinforce harmful stereotypes.

The machine isn’t biased on its own; it’s just a reflection of human inputs and assumptions.

This is especially visible on social media, where posts designed for maximum reach tend to prioritise emotion over accuracy. A well-crafted emotional headline tends to outperform a complex explanation. Eventually, this can shift the balance between information and influence.

This is where ethics and design intersect and become more important than ever. Responsible AI is not only about compliance or bias reduction; it’s about the intent behind every interaction. It’s about asking whether our systems help people make informed decisions or simply keep them engaged.

Awareness is always the first step. But to make a difference, ethical thinking must be built into every phase of product design, from data collection to model training to user communication.

Transparency doesn’t just build trust; it builds understanding.

Designing with Awareness

We can all agree that AI has quietly become a co-designer, as it determines what we see, when we see it, and how often. But good design has always been about intention.

At Mercedes-Benz.io, we talk a lot about designing for trust. Whether it’s digital products for mobility, data systems, or communication, the question always remains the same: how do we ensure that technology helps people rather than overwhelms them?

When it comes to social media, that question becomes even more relevant. A responsible experience is one where intelligence serves clarity, where automation simplifies complexity without erasing transparency.

When designing digital experiences, we should be asking ourselves:

- Do users know their feed is personalised?

- Can they understand why certain content appears?

- Do they have the power to adjust what influences their view?

When people understand the logic behind what they see, they can interact more consciously. That is what it means to scroll smarter.
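As a rough illustration of those three questions, the sketch below ranks posts, records the main reason each one appears, and lets the user change the weights behind the ranking. Everything here is hypothetical: the `Post` class, the signal names, and the default weights are assumptions made for the example, not a description of any real feed.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    signals: dict  # signal name -> strength between 0 and 1 (hypothetical)


# Hypothetical default weights; a real system would have many more signals.
DEFAULT_WEIGHTS = {"follows_author": 0.5, "similar_to_liked": 0.3, "trending": 0.2}


def rank_with_reasons(posts, weights=DEFAULT_WEIGHTS):
    """Return (title, score, main_reason) tuples, highest score first."""
    ranked = []
    for post in posts:
        # How much each signal contributes to this post's score.
        contributions = {
            name: weights.get(name, 0.0) * value
            for name, value in post.signals.items()
        }
        score = sum(contributions.values())
        # The largest contribution is the "why am I seeing this?" answer.
        main_reason = max(contributions, key=contributions.get) if contributions else None
        ranked.append((post.title, score, main_reason))
    ranked.sort(key=lambda item: item[1], reverse=True)
    return ranked


posts = [
    Post("Friend's photo", {"follows_author": 1.0}),
    Post("Viral clip", {"trending": 1.0, "similar_to_liked": 0.5}),
]

# Default view, then a view where the user has chosen to favour trending content.
default_feed = rank_with_reasons(posts)
custom_feed = rank_with_reasons(
    posts, {"follows_author": 0.1, "similar_to_liked": 0.3, "trending": 0.9}
)
```

The point of the design is that the reason travels with the result: the same function that orders the feed can also tell the user why a post is there, and the weights are an input the user can change rather than a hidden constant.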

The Human Element

Every algorithm starts with a team of humans making decisions: what data to use, what outcomes to optimise, and which behaviours to reward. Every piece of content starts with intention, creativity, and emotion.

We also know that AI can replicate structure but not purpose; it can scale production but not empathy. That’s why the human layer is not just relevant but essential.

As creators, developers, and strategists, we decide what values these systems amplify. Our responsibility is to ensure that amplification leads to understanding, not noise.

The digital world isn’t something we passively exist in; it’s something we build together. Scrolling smarter is not about rejecting technology. It’s about reclaiming awareness.

Behind every recommendation, there’s a human decision. Behind every trend, there’s a pattern of choices. We may not control every algorithm, but we can define what these systems represent. That’s where real progress begins.

Related articles

Vera Figueiredo

When Roles Shift: A Moment of Ownership and A Lesson in Leadership

What happens when you suddenly must step up and take the lead? When the roadmap is still fuzzy, decisions can’t wait, and everyone in the room is looking to you wondering what’s next?

Oct 7, 2025

Carolina Brásio, Yusuf Ekiz

From Clean Code to Koog: KotlinConf 2025 in a Nutshell

Oct 3, 2025

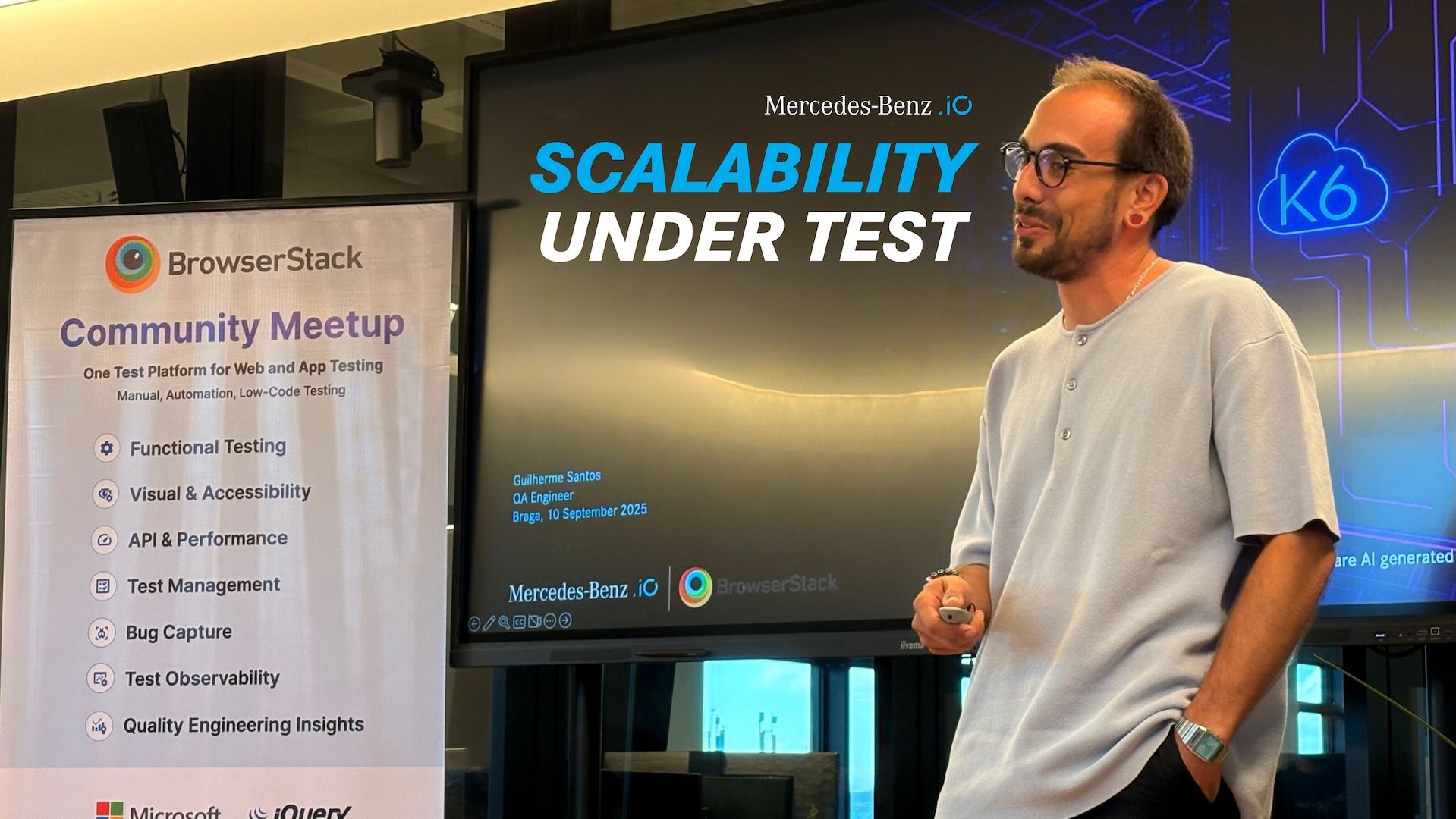

Guilherme Santos

Scalability Under Test: What Load Testing Can’t Miss in 2025

On September 10th, 2025, Guilherme Santos had the chance to take the stage at the BrowserStack QA Meetup, hosted at the Mercedes-Benz.io office in Braga, to talk about something every tech team faces sooner or later: performance under pressure.

Sep 30, 2025