The Guardrails Your LLM Needs: Reliable Agent-Based Systems

Large Language Models (LLMs) are a type of AI system trained to understand and generate human language. They're powerful, but also unpredictable. Without clear structure, they can misinterpret context, invent facts, or act opaquely. That's why, at Mercedes-Benz.io, we're exploring how to design systems that don't just use LLMs but govern them. What follows is how we're doing that, and why it matters.

Artificial Intelligence systems are often celebrated for their potential: their ability to generate, summarize, predict, and learn. But in practice, LLMs still pose major challenges: gaps in contextual understanding, hallucinated outputs, and opaque decision-making paths.

At Mercedes-Benz.io, we've been experimenting with new ways to tackle these issues by designing agentic systems: structured, traceable, explicitly governed AI pipelines. Since Kotlin is our language of choice for server-side applications, experimenting with Koog, JetBrains' Kotlin framework for building AI agents, was a natural decision that aligns with our goals of reliability and maintainability.

Implementation note: The solution discussed here is implemented in Kotlin using Koog's abstractions (Strategies, Tools, and Models). Thanks to Kotlin's strong typing and concise syntax, reasoning steps remain explicit and testable, while Koog handles execution and tracing.


WHAT'S THE PROBLEM WITH USING LLMS "OUT OF THE BOX"?

In theory, LLMs offer a flexible, general-purpose interface to knowledge and language. But in real-world applications, flexibility can become unpredictability.

There are four recurring problems:

- Contextual understanding gaps: LLMs may understand syntax, but they often fail to track task-specific context across complex workflows.

- Hallucinations: LLMs generate confident but false statements, which can't be trusted in critical scenarios like scheduling, legal advice, or system decisions.

- Lack of validation: Without external validation or constraints, outputs can't be guaranteed to reflect real-world logic or business rules.

- Unstructured behavior: LLMs behave like unbounded functions, and without structure, we have no meaningful way to govern or trace their decisions.
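To make the validation gap concrete, here is a minimal, framework-agnostic Kotlin sketch (not Koog code) of checking an LLM's proposed booking against verified data before acting on it. The `BookingProposal` type, the `verifiedDealers` set, and the two business rules are hypothetical and exist only for illustration.

```kotlin
import java.time.LocalDate

// Hypothetical shape of what an LLM might propose after reading a user request.
data class BookingProposal(val dealerId: String, val date: LocalDate)

// Outcome of validation: either accepted, or rejected with explicit reasons.
sealed class Validation {
    object Accepted : Validation()
    data class Rejected(val reasons: List<String>) : Validation()
}

// Checks a proposal against verified data and simple business rules
// instead of trusting the model's output as-is.
fun validateProposal(
    proposal: BookingProposal,
    verifiedDealers: Set<String>, // hypothetical: IDs fetched from a trusted source
    today: LocalDate,
): Validation {
    val reasons = mutableListOf<String>()
    if (proposal.dealerId !in verifiedDealers) {
        reasons += "Unknown dealer '${proposal.dealerId}' (possibly hallucinated)"
    }
    if (!proposal.date.isAfter(today)) {
        reasons += "Date ${proposal.date} is not in the future"
    }
    return if (reasons.isEmpty()) Validation.Accepted else Validation.Rejected(reasons)
}
```

A rejected proposal carries its reasons, so the system can ask the model or the user for a correction instead of silently acting on invented data.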

We needed a different approach.

BUILDING A SYSTEM WITH INTENT

That's where the Agentic approach steps in. Instead of relying on a single LLM prompt, we define the system as a collection of agents, each with its own Strategies, Tools, and roles. Together, these agents form a coordinated reasoning system.

This is what Koog helps us build: structured AI workflows designed for modularity, traceability, and human-defined control logic.

In short: we don't just ask the model what to do. We define how it should think.

INTRODUCING KOOG: A FRAMEWORK FOR AGENT-BASED AI

Koog lets us design and run Agentic AI systems reliably.

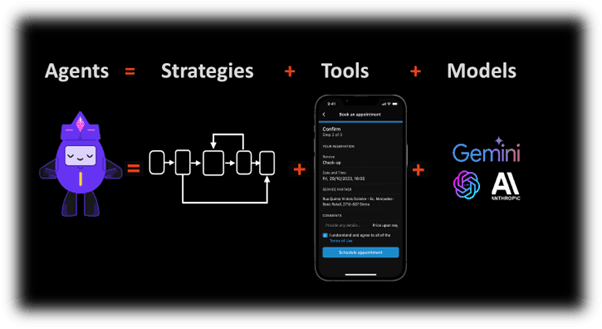

Each system is composed of three main layers:

- Strategies: High-level reasoning paths. They define the workflows, validations, and rules that ultimately fulfill our business intent.

- Tools: Interfaces the agent can call - APIs, validators, data fetchers, etc.

- Models: The LLMs themselves. Following our strict instructions, they decide when to call Tools and generate either natural, fluent human language (to interact with real people) or structured, machine-readable output (to interact directly with systems).
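To show how the three layers divide responsibility, here is a minimal plain-Kotlin sketch. `Tool`, `Model`, and `Strategy` below are hypothetical stand-ins, not Koog's actual types. The key idea is that the Strategy owns the rules, and the Model can only choose among Tools the Strategy explicitly exposes.

```kotlin
// A Tool: a named capability the agent may call (API, validator, fetcher).
fun interface Tool {
    fun call(input: String): String
}

// A Model: stand-in for an LLM. Here it only decides which tool to call,
// returning null when no registered tool fits the request.
fun interface Model {
    fun chooseTool(request: String, available: Set<String>): String?
}

// A Strategy: the human-defined workflow. The model's choice is checked
// against the registry before anything is executed.
class Strategy(private val tools: Map<String, Tool>, private val model: Model) {
    fun run(request: String): String {
        val choice = model.chooseTool(request, tools.keys)
            ?: return "No suitable tool; ask the user for clarification."
        val tool = tools[choice]
            ?: return "Rejected: model requested unregistered tool '$choice'."
        return tool.call(request)
    }
}
```

Swapping the `Model` implementation leaves the Strategy's rules untouched, which mirrors the modularity the article attributes to this layering.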

Koog supports multiple LLM providers, including OpenAI, Anthropic, Google, and Ollama, giving us flexibility without vendor lock-in (we can even plug in our own). It also offers:

- Tracing, for tracking what the AI process does.

- LLM history compression, to avoid sending unnecessary data to LLMs.

- Built-in memory capabilities that let AI agents store, retrieve, and use information across conversations.

- MCP (Model Context Protocol) integration.

- Support for A2A (Agent-to-Agent Protocol), a standardized protocol for communication between AI agents.

And much more.
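History compression is easiest to grasp with a small example. The following plain-Kotlin sketch illustrates the general idea only and is not Koog's implementation. It keeps the system prompt and the most recent messages and replaces older dialogue with a single summary. The `summarize` parameter is a hypothetical hook; in a real agent it would typically be another LLM call.

```kotlin
// Roles in a conversation history.
enum class Role { SYSTEM, USER, ASSISTANT }

data class Message(val role: Role, val text: String)

// Keeps all system messages plus the last `keepLast` dialogue messages;
// older dialogue is collapsed into one summary message built by `summarize`.
fun compressHistory(
    history: List<Message>,
    keepLast: Int,
    summarize: (List<Message>) -> String,
): List<Message> {
    require(keepLast >= 0) { "keepLast must be non-negative" }
    val system = history.filter { it.role == Role.SYSTEM }
    val dialogue = history.filter { it.role != Role.SYSTEM }
    if (dialogue.size <= keepLast) return history
    val older = dialogue.dropLast(keepLast)
    val recent = dialogue.takeLast(keepLast)
    val summary = Message(Role.SYSTEM, "Summary of earlier conversation: " + summarize(older))
    return system + summary + recent
}
```

The model still sees the instructions and the latest turns, while the token cost of long conversations stays bounded.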

A USE CASE: BOOKING VEHICLE SERVICE MAINTENANCE

Let's take a real Aftersales use case:

Scheduling vehicle service maintenance at a Mercedes-Benz dealer

On the surface, it sounds simple. But it requires reliable access to:

- Accurate vehicle data.

- A verified list of dealers.

- Realistic appointment availability.

- Calendar validation for both parties.
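Calendar validation is a good example of logic that belongs in deterministic code rather than in the model. The sketch below, in plain Kotlin with a hypothetical `Slot` type, computes the windows in which both the customer and the dealer are free for at least a given duration. It uses a two-pointer sweep over start-sorted free slots and assumes that each calendar's own slots do not overlap.

```kotlin
import java.time.LocalDateTime

// A free time window [start, end).
data class Slot(val start: LocalDateTime, val end: LocalDateTime) {
    init { require(start.isBefore(end)) { "start must be before end" } }
}

// Returns the overlaps of two calendars' free slots that last at least `minutes`.
fun commonAvailability(a: List<Slot>, b: List<Slot>, minutes: Long): List<Slot> {
    val xs = a.sortedBy { it.start }
    val ys = b.sortedBy { it.start }
    val result = mutableListOf<Slot>()
    var i = 0
    var j = 0
    while (i < xs.size && j < ys.size) {
        // Overlap of the current pair of slots (may be empty).
        val start = maxOf(xs[i].start, ys[j].start)
        val end = minOf(xs[i].end, ys[j].end)
        if (start.isBefore(end) && !start.plusMinutes(minutes).isAfter(end)) {
            result += Slot(start, end)
        }
        // Advance whichever slot ends first; it cannot overlap anything later.
        if (xs[i].end < ys[j].end) i++ else j++
    }
    return result
}
```

The model can then only propose appointments from this computed list, rather than guessing availability.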

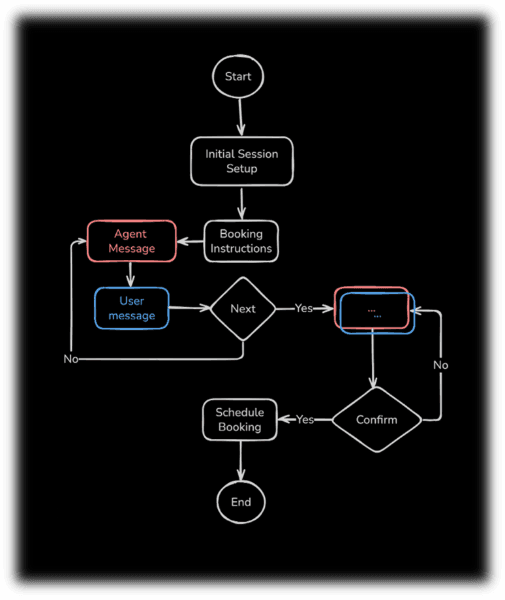

An unstructured LLM might guess or invent data. With Koog, the workflow is explicit and traceable, and any answer the model fabricates runs into explicit constraints. We decompose the problem into the following strategy steps:

- Understand the user's request

- Fetch verified vehicle info

- Query available dealers

- Match calendars for availability

- Run booking creation validations

- Compose a summary of the booking

- Request user confirmation to proceed

- Submit the request
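The steps above can be sketched as an ordered pipeline that stops at the first failure, so later steps never operate on unverified data. This is a plain-Kotlin illustration under stated assumptions: `BookingState`, `Step`, and `runWorkflow` are hypothetical names. Koog expresses workflows through its own strategy abstractions, and this sketch does not use them.

```kotlin
// Shared state accumulated as the workflow progresses (hypothetical fields).
data class BookingState(
    val request: String,
    val vehicle: String? = null,
    val dealer: String? = null,
    val slot: String? = null,
    val confirmed: Boolean = false,
    val log: List<String> = emptyList(),
)

// One named step of the workflow: returns the updated state or a failure.
class Step(val name: String, val run: (BookingState) -> Result<BookingState>)

// Runs steps in order and stops at the first failure. Each completed step
// is appended to the state's log so the run can be audited afterwards.
fun runWorkflow(initial: BookingState, steps: List<Step>): Result<BookingState> {
    var state = initial
    for (step in steps) {
        state = step.run(state).getOrElse { error ->
            return Result.failure(
                IllegalStateException("Step '${step.name}' failed: ${error.message}", error)
            )
        }
        state = state.copy(log = state.log + step.name)
    }
    return Result.success(state)
}
```

For example, a list of steps such as `fetchVehicle`, `queryDealers`, and `matchCalendars` either completes with a full log, or fails with a message naming the exact step that could not proceed.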

Here is a very basic example of an Agent strategy. At any point in the workflow, we decide whether we can move forward, and we only move forward once we have what we need:

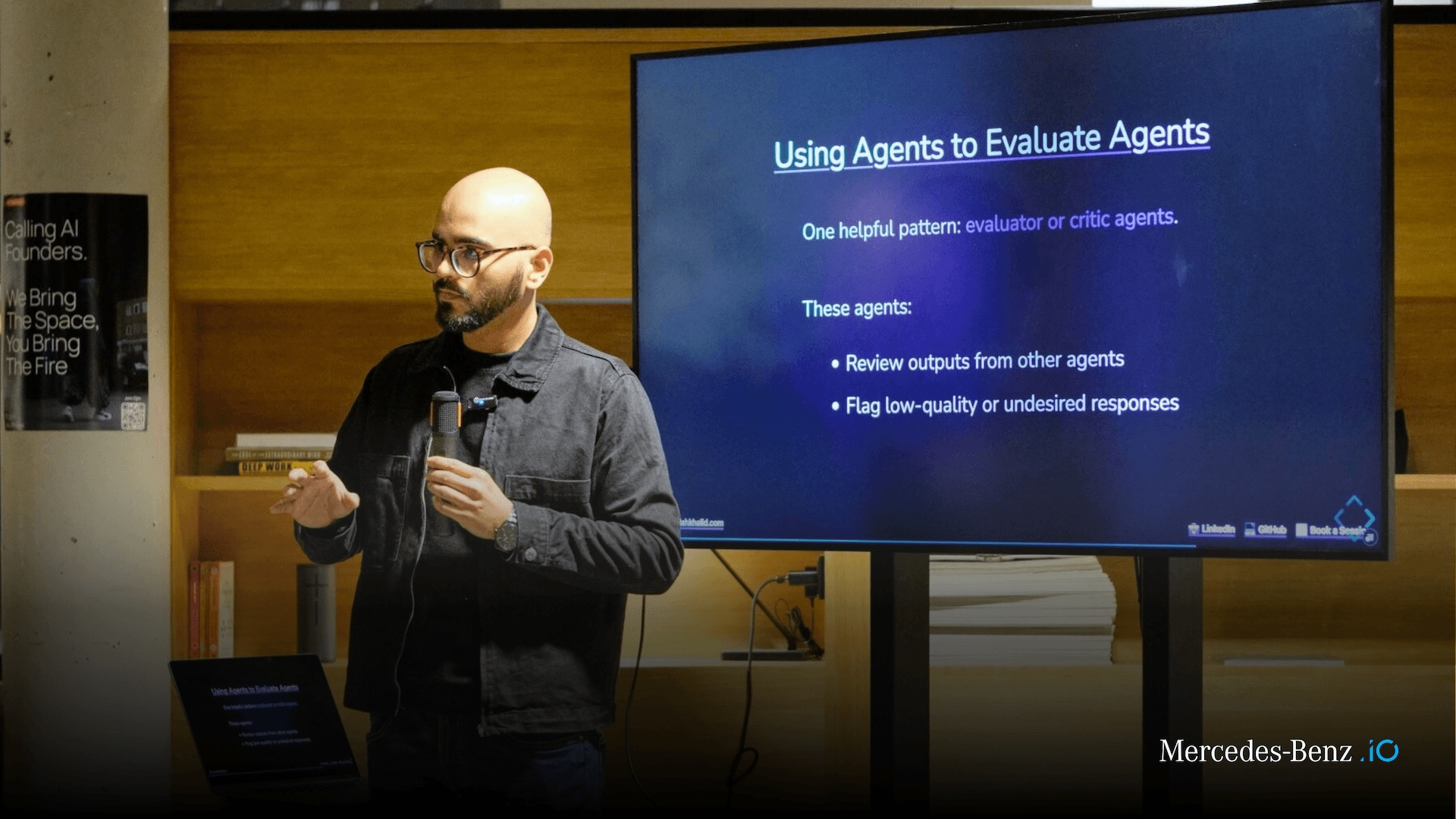
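The "only move forward when we have what we need" rule can be expressed as a single decision function. Koog builds strategies as graphs of nodes and edges, but the plain-Kotlin sketch below does not use Koog's API. `Collected`, `Next`, and `decideNext` are hypothetical names that model one decision node choosing exactly one outgoing edge.

```kotlin
// What the agent has collected so far from the user and from tools.
data class Collected(
    val vehicleId: String? = null,
    val dealerId: String? = null,
    val slot: String? = null,
    val userConfirmed: Boolean = false,
)

// Possible next moves in the strategy graph.
sealed class Next {
    // Go back and gather the missing piece (from the user or from a tool).
    data class Gather(val missing: String) : Next()
    object RequestConfirmation : Next()
    object Submit : Next()
}

// Decision node: the workflow only advances when the required data is present,
// and submission only happens after explicit user confirmation.
fun decideNext(state: Collected): Next = when {
    state.vehicleId == null -> Next.Gather("vehicle")
    state.dealerId == null -> Next.Gather("dealer")
    state.slot == null -> Next.Gather("appointment slot")
    !state.userConfirmed -> Next.RequestConfirmation
    else -> Next.Submit
}
```

Because the decision is ordinary code, every branch can be unit-tested without calling a model.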

WHY MODULARITY AND TRANSPARENCY MATTER

One of the key advantages of Koog is its modular design. We can extend the system by adding new Strategies, swapping in different Models, or integrating new Tools - without rewriting everything from scratch.

It also allows us to enforce transparency across the AI lifecycle. We know what the system is doing, when, and why. We can inspect intermediate reasoning, tweak behavior, and avoid black-box decisions, which is essential for trust.
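Koog ships its own tracing. As an illustration of the underlying idea only, the following plain-Kotlin sketch wraps any step function so that each call's input and output are recorded in order. `Tracer` and `TraceEvent` are hypothetical names, not Koog's tracing API.

```kotlin
// One recorded event: which step ran, with what input, and what it produced.
data class TraceEvent(val step: String, val input: String, val output: String)

// Collects events in call order so a run can be inspected after the fact.
class Tracer {
    private val events = mutableListOf<TraceEvent>()
    val history: List<TraceEvent> get() = events.toList()

    // Wraps a step function so every call is recorded, without changing its result.
    fun <T> traced(step: String, fn: (String) -> T): (String) -> T = { input ->
        val output = fn(input)
        events += TraceEvent(step, input, output.toString())
        output
    }
}
```

Wrapping Tools this way leaves their behavior unchanged while producing an ordered record of what the system did and with which data.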

Before Koog, we couldn't predict what the system would do. Now we enforce guardrails that make its behavior predictable.

FINAL THOUGHTS

If you want reliability from AI, you can't just hope the model will behave. You have to design behavior.

That's what the Agentic Approach gives us: not just outputs, but structure. Not just intelligence, but accountability.

Our implementation, built in Kotlin with the Koog framework, provides the building blocks for modular, testable, and observable AI systems. Koog is evolving quickly and already performs well in our experiments. We're exploring how far it can scale, and the foundations are there: a system that doesn't just generate, but reasons with purpose, structure, and control.

For more technical details, visit Koog's documentation: https://docs.koog.ai/.
